Design is like a box of chocolates…

Finishing that famous Forrest Gump sentence, we never really know what we are going to get with design. The reason is that there is so much more to good design than what meets the eye.

So how do we inform people up front what they are going to get? And even better, how do we inform designers of what the user experience of their design is going to be?

We are going to talk about electric kettles, although this article is not about that.

Instead, this article will introduce a formalised expert review method to evaluate the user experience of design - using electric kettles as a design case.

Let’s do it!

Although there are many subjective elements about the user experience, the field of human factors offers us a toolbox to objectively quantify a broad range of qualities that impact the user experience.

With the human factors toolbox, we can therefore evaluate design - like electric kettles.

Such evaluations can be thought of as similar to the more technical evaluations that we are used to from product consumer organisations. Such technical reviews empower consumers to make more informed choices - and manufacturers to benchmark their design to competitors.

Below is our four-step guide for how to evaluate UX design:

Assessing the user experience in four steps

In the following, we will detail the general approach of our four-step process and relate that to the specific case of electric kettles.


1: Task analysis

⬇

2: PCA analysis

⬇

3: Objective scales

⬇

4: Rating

NOTE: we do not necessarily expect you to be able to carry out this sort of analysis on your own afterwards. This will typically require experience within the design or human factors domain.

However, we do hope the article will provide you with an understanding of how critical elements of the user experience can be readily assessed and benchmarked to support both designers and consumers alike.

Step 1: Task analysis

The task analysis is the backbone of the analysis. It breaks the general use flow down into a series of tasks. Each task is in turn made up of a series of individual subtasks (also called steps or operations).

The task analysis should be a fair representation of what would constitute the majority of uses. In our task analysis of electric kettles depicted below, you can see the two main tasks and their individual subtasks.

Two things to note about the task analysis:

This task analysis would not be fitting for more specific use scenarios where you, for instance, use a kettle that you can adjust to specific water temperatures. Here we just heat the water to the boiling point.

Not all subtask qualities are readily discernible in this task analysis overview. For instance, subtask 1.3, where you fill the kettle with water, includes elements like opening the faucet and paying attention to the water meter indicator in order not to overfill the kettle.

To assist in the task analysis it can be useful to identify all the functional design components that the user will interact with.

It is these “interaction elements” that will ultimately get a score relative to how well they support perception, cognition and action.
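As a rough illustration, a task analysis like this can be captured as a small data structure. The sketch below is only one possible way to do it: the class names, the task name and the helper `interaction_elements` are our own placeholders, and only subtask 1.3 (filling the kettle) is taken from the analysis above.

```python
from dataclasses import dataclass, field


@dataclass
class Subtask:
    """One step (operation) within a task."""
    number: str  # e.g. "1.3"
    description: str
    interaction_elements: list[str] = field(default_factory=list)


@dataclass
class Task:
    """A task in the use flow, made up of individual subtasks."""
    number: str
    description: str
    subtasks: list[Subtask] = field(default_factory=list)


# Only subtask 1.3 comes from the article. The task name and the
# interaction elements listed here are illustrative placeholders.
fill_kettle = Subtask(
    number="1.3",
    description="Fill the kettle with water",
    interaction_elements=["lid", "water meter indicator"],
)
task_1 = Task(
    number="1",
    description="Prepare the kettle (placeholder name)",
    subtasks=[fill_kettle],
)


def interaction_elements(tasks: list[Task]) -> set[str]:
    """Collect every design component the user interacts with."""
    return {
        element
        for task in tasks
        for subtask in task.subtasks
        for element in subtask.interaction_elements
    }


print(sorted(interaction_elements([task_1])))
# ['lid', 'water meter indicator']
```

Listing the interaction elements per subtask makes it easy to see which components need a score later on.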

The five kettles we reviewed in the review example.

Step 2: PCA analysis

The nitty gritty human factors details

This is the step where it gets very psychological.

PCA is an abbreviation for Perception, Cognition and Action. This analysis is a further detailing of each subtask in the task analysis and informs us what the design needs to support as smoothly as possible.

Each subtask hereby has three individual rating qualities we need to consider:

How well does the design support our senses (e.g., visual, auditory, haptic) in picking up the relevant information?

How well does the design support our understanding of what goes on and what to do?

How well does the design support an effortless action?

In order to carry out the PCA rating, we need objective scales that define how to assign specific scores. This is the focus of step three.

PCA components - kettle example

Perception

To turn on an electric kettle, the button should signal the “on state” visually (and audibly) as clearly as possible. Some kettles light up very dimly, whereas others light up the full body.

Cognition

The cognition component concerns thinking and explores, for instance, how potential misunderstandings can occur. Are the functions of the buttons logical to the user? Is the button on when it is up, or do I need to press it down to switch it on?

Action

The action component explores how easy or difficult it is to carry out the needed subtasks. For instance, on some kettles, placing the kettle on the stand can be done very freely with a minimum of precision handling. On other kettles, the same placement requires a precise fit in a very specific orientation.

▶︎ You can see examples of these qualities in the results slides below in section four.

Step 3: Objective scales

For each subtask, we need an objective scale to quantify a good and a bad score for perception, cognition and action. Note that for some subtasks, not all three components may be applicable, for instance if a subtask does not require any action as such.
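One way to picture this is a small record per subtask where a component that does not apply is simply left empty. This is a minimal sketch, not our actual tooling: the class name `PCARating` and its method are hypothetical, and the 1 to 5 range assumes the five-point scale described further down.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PCARating:
    """Scores for one subtask; None marks a component that does not apply."""
    perception: Optional[int] = None
    cognition: Optional[int] = None
    action: Optional[int] = None

    def __post_init__(self) -> None:
        # Assumption: scores follow a five-point scale (1 to 5).
        for name, score in self.applicable().items():
            if not 1 <= score <= 5:
                raise ValueError(f"{name} score must be between 1 and 5, got {score}")

    def applicable(self) -> dict[str, int]:
        """Return only the components that were actually rated."""
        all_scores = {
            "perception": self.perception,
            "cognition": self.cognition,
            "action": self.action,
        }
        return {name: s for name, s in all_scores.items() if s is not None}


# Hypothetical example: a subtask that only involves reading an indicator
# light, so there is nothing to rate for action.
rating = PCARating(perception=4, cognition=5)
print(rating.applicable())  # {'perception': 4, 'cognition': 5}
```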

In the case example with electric kettles, the scales should help us quantify design qualities like these examples below:

Perception: How clearly can I tell the kettle is switched on? Here all three senses come into play. Most buttons have a mechanical “haptic” sense, an auditory click and a visual indication.

Cognition: Do the interaction elements clearly live up to my expectations? Although an electric kettle is a pretty straightforward design, it may not be readily clear how the lid functions in order to open it. Likewise, buttons may move the other way than expected (e.g., press down to turn on versus lift up).

Action: How easy is it to handle the kettle? Does the handle support a robust power grip - especially when the kettle is filled with boiling water?

When we define the objective scales, a requirement is that they are clearly defined. Ideally, two persons should be able to reach a similar rating using the scales (this is formally called “interrater agreement”).
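Interrater agreement can be checked with simple arithmetic once two persons have rated the same items. The sketch below shows plain percent agreement and Cohen's kappa, a common chance-corrected measure. Choosing kappa is our assumption for illustration; the method itself does not prescribe a specific statistic, and the ratings in the example are made up.

```python
from collections import Counter


def percent_agreement(rater_a: list[int], rater_b: list[int]) -> float:
    """Share of items on which both raters gave the same score."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same, non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a: list[int], rater_b: list[int]) -> float:
    """Agreement corrected for the agreement expected by chance."""
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability that both raters pick the same score
    # if each rated independently with their own score frequencies.
    expected = sum(counts_a[s] * counts_b[s] for s in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one and the same score throughout
    return (observed - expected) / (1 - expected)


# Made-up ratings of five subtasks by two reviewers.
reviewer_1 = [5, 4, 3, 2, 4]
reviewer_2 = [5, 4, 3, 3, 4]
print(percent_agreement(reviewer_1, reviewer_2))  # 0.8
print(round(cohens_kappa(reviewer_1, reviewer_2), 2))
```

Note that this unweighted kappa treats a disagreement between 2 and 3 the same as one between 1 and 5. For an ordered scale, a weighted variant may be a better fit.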

Our five-point rating scale

When we work with expert rating scales, we need a resolution that is practically manageable. Scales may differ relative to the type of design that is reviewed. In this case example, with electric kettles, we use a five-point scale.

Here is the logic of that scale.

For a product like an electric kettle, you can make user errors and even risk causing harm to yourself (or somebody else). Therefore, the scale includes two lower-end scores of 1 and 2. These scores reflect what we can argue is a design fail - something that should have been fixed. You should not buy products with design fails.

This leaves us with a gradient of three scores for us to grade the quality of the design:

The best score (5) is when the design supports optimal perception, cognition and action across all subtasks (and use scenarios - e.g. boiling water for pasta or a cup of tea).

The second-best score (4) is when we have overall good design but with room for some improvement.

The third-best score (3) is when we have substantial problems within one or more subtasks.

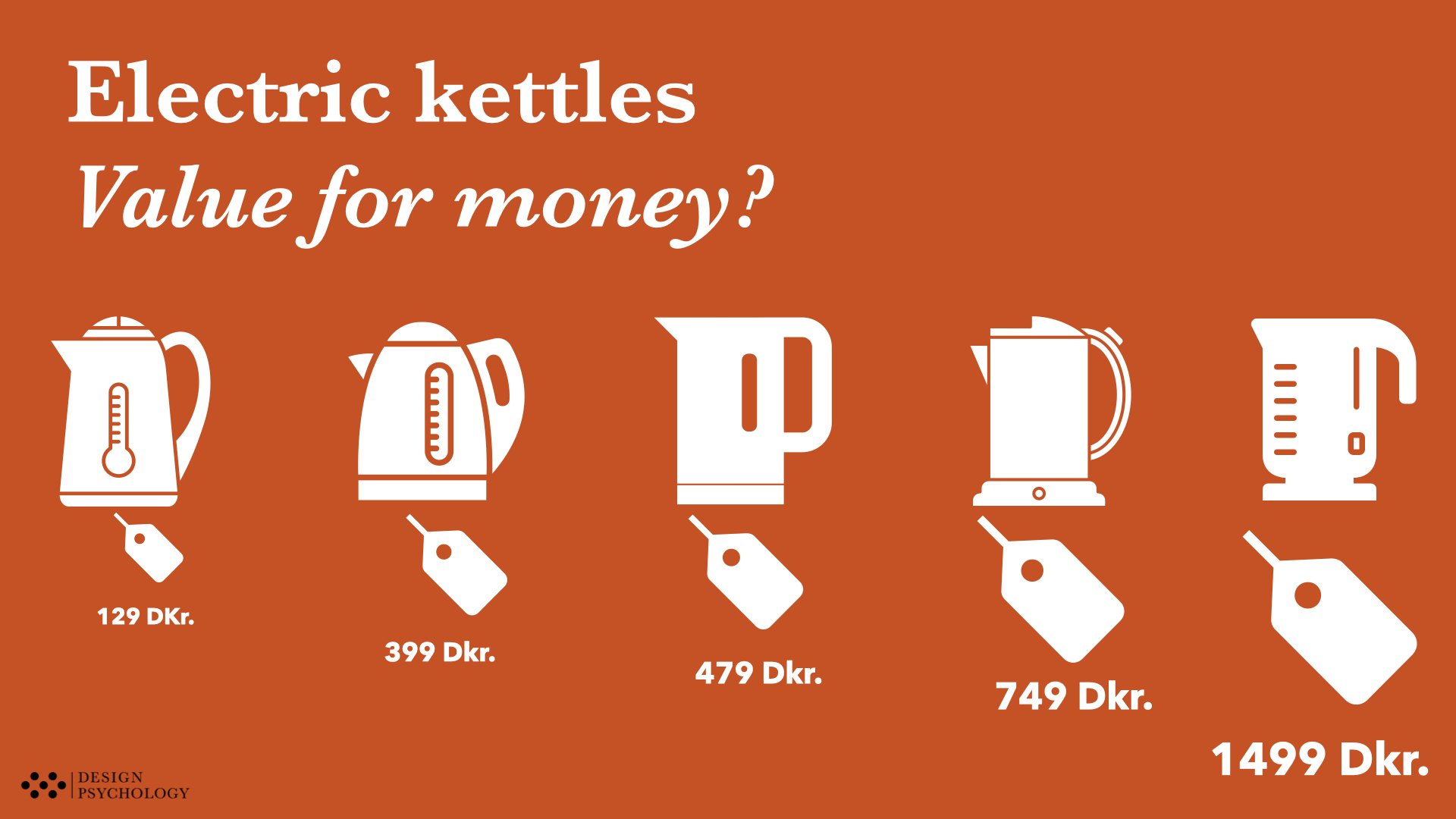

The picture below depicts the perceptual qualities and their corresponding grade related to the on/off button and indicator light.

Scores 1 and 2: when design can cause errors and harm 😠⚠️

In the rating table, we also have scores 1 and 2. Score 2 is when a design can cause a user error. This could be accidentally spilling water when pouring from the kettle.

Some design qualities may further lead to the risk of harm to the user. Below is an example of how poor handle ergonomics may cause the user to get burned on the boiling hot kettle. In such cases, a score of 1 is assigned. This example is illustrated in the picture below.

You can see more examples of design fails (score 1 and 2) in the results slides in the section “Step 4 - Rating”.
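The full five-point scale can be summarised in a few lines of code. This is a minimal sketch: the member names and the helper `is_design_fail` are our own labels for illustration, while the meaning of each score follows the descriptions above.

```python
from enum import IntEnum


class Score(IntEnum):
    RISK_OF_HARM = 1          # design fail: the user may get hurt
    USER_ERROR = 2            # design fail: the design can cause a user error
    SUBSTANTIAL_PROBLEMS = 3  # substantial problems in one or more subtasks
    GOOD = 4                  # overall good design, some room for improvement
    OPTIMAL = 5               # optimal perception, cognition and action


def is_design_fail(score: Score) -> bool:
    """Scores 1 and 2 count as design fails."""
    return score <= Score.USER_ERROR


print(is_design_fail(Score.USER_ERROR))  # True
print(is_design_fail(Score(3)))          # False
```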

From PCA scores to subtask and grouped task scores

When we work with objective rating scales, we want to have a very detailed resolution of what design qualities are causing problems. At the same time, we want to communicate our findings as simply as possible by having fewer individual scores.

To have both qualities, we work with PCA-level scores for each subtask, and we combine these into both subtask scores and grouped subtask scores.

Here is how that is done:

First, we assign a score at the PCA level to each subtask.

Second, the PCA scores are combined into a score for that subtask.

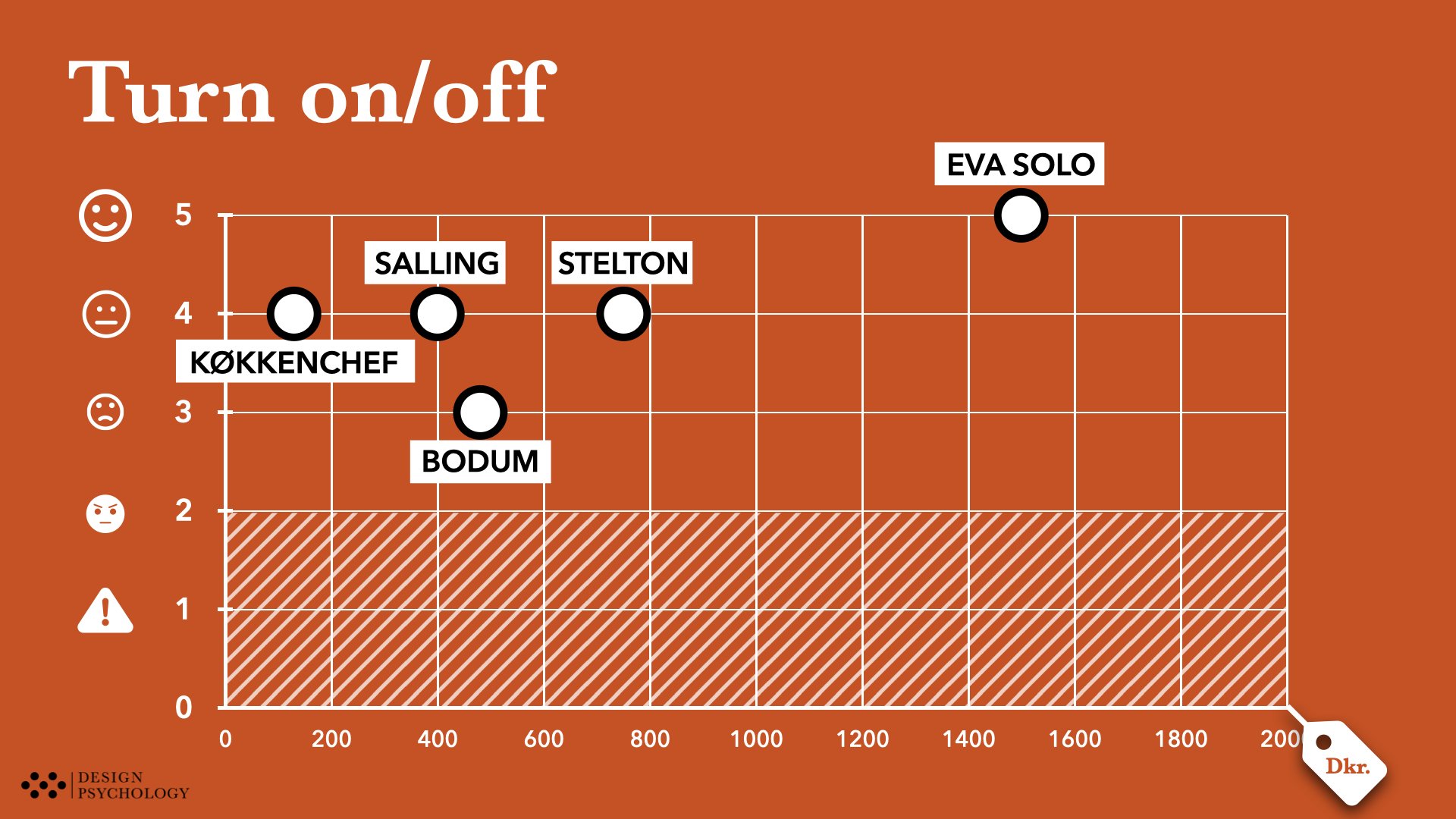

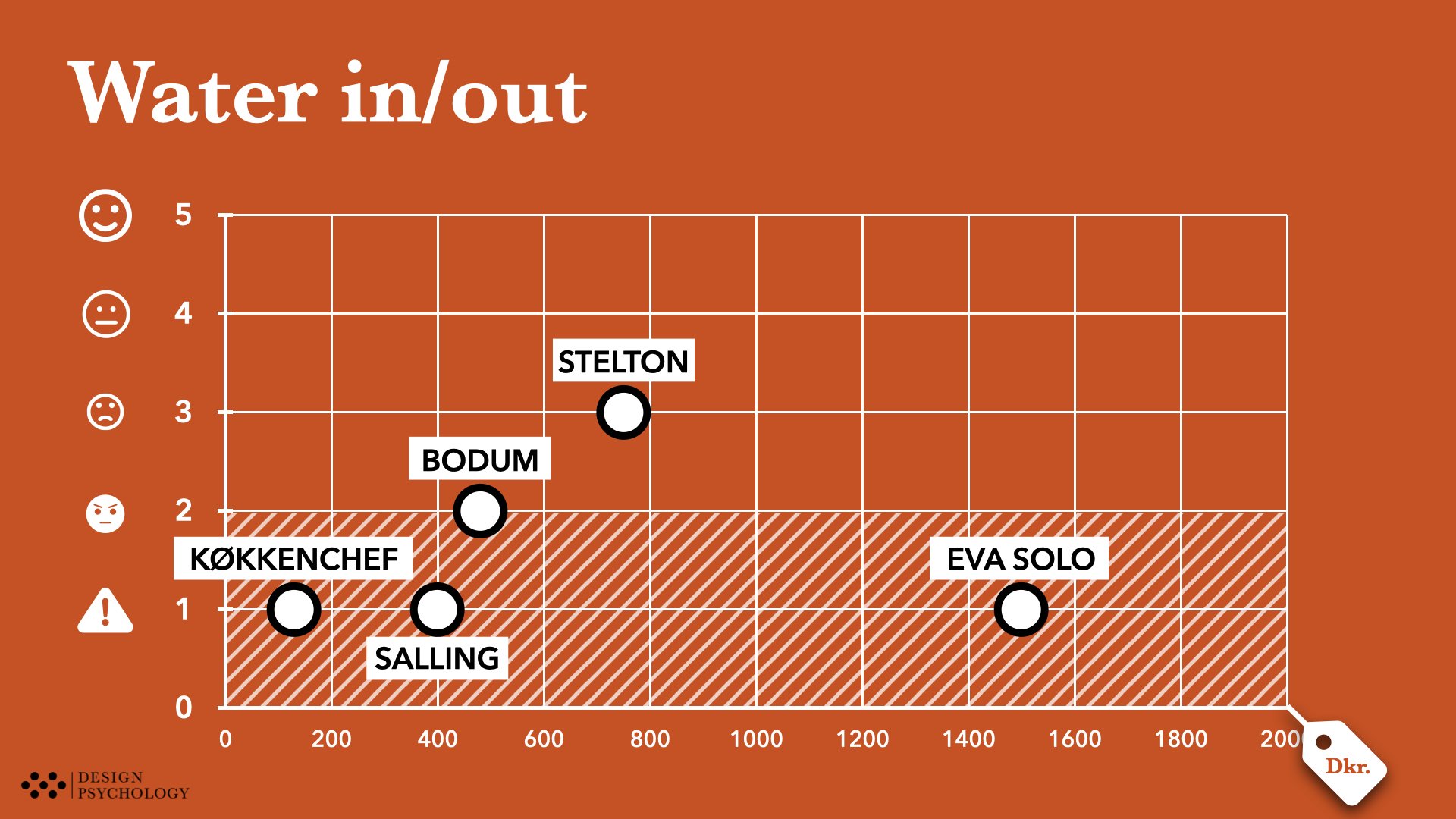

Third, we can combine subtask scores into task scores. These can either follow the structure of the task analysis - or we can group them based on the design elements the subtasks belong to. In the kettle case, we chose to group based on design elements. These are 1) Turn on/off, 2) General Handling, and 3) Water in/out. The result slides, therefore, contain three graphics for each of the three subtask group scores.
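The roll-up from PCA scores to grouped subtask scores can be sketched as follows. Two things here are assumptions for illustration only: the rule that a subtask gets the score of its weakest applicable component, and the subtask names with their example scores. The three group names are the ones used in the kettle case.

```python
from collections import defaultdict
from typing import Optional

PCA = tuple[Optional[int], Optional[int], Optional[int]]

# Hypothetical PCA scores per subtask: (perception, cognition, action).
# None means the component does not apply. These are NOT the review's results.
pca_scores: dict[str, PCA] = {
    "Switch on": (4, 5, 5),
    "Lift kettle": (None, 5, 3),
    "Fill with water": (3, 4, 4),
    "Pour water": (4, 4, 5),
}

# Group names are from the article; the assignment of these placeholder
# subtasks to groups is illustrative.
groups: dict[str, str] = {
    "Switch on": "Turn on/off",
    "Lift kettle": "General Handling",
    "Fill with water": "Water in/out",
    "Pour water": "Water in/out",
}


def subtask_score(pca: PCA) -> int:
    """Assumed rule: a subtask is only as good as its weakest applicable component."""
    applicable = [s for s in pca if s is not None]
    if not applicable:
        raise ValueError("At least one PCA component must be rated")
    return min(applicable)


def scores_by_group(pca_by_subtask: dict[str, PCA],
                    group_of: dict[str, str]) -> dict[str, list[int]]:
    """Collect the subtask scores under the design element they belong to."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for subtask, pca in pca_by_subtask.items():
        grouped[group_of[subtask]].append(subtask_score(pca))
    return dict(grouped)


print(scores_by_group(pca_scores, groups))
# {'Turn on/off': [4], 'General Handling': [3], 'Water in/out': [3, 4]}
```

Keeping the PCA-level scores alongside the grouped view preserves the detailed resolution while still allowing a simple summary per design element.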

What to do with “deal-breakers” in a group of scores?

If a group of subtask scores contains a design fail score (either 1 or 2), then that whole task group score fails. We call these “deal-breakers” or “loser takes all”.

In practical terms, this means that our results show the design-fail score of 1 or 2 as the result for the entire group, irrespective of whether some of the subtask scores in that group are 3, 4, or even 5. In short, the loser takes all, because it is a deal-breaker to have something in the design where you risk making errors or causing harm.
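The deal-breaker rule is simple enough to express in a few lines. The sketch below is illustrative: the function name `group_score` is hypothetical, and averaging the scores when there is no deal-breaker is an assumption, since the rule above only defines what happens when a design fail is present.

```python
DESIGN_FAIL_MAX = 2  # scores 1 and 2 are design fails


def group_score(subtask_scores: list[int]) -> float:
    """Apply the "loser takes all" rule to one group of subtask scores."""
    if not subtask_scores:
        raise ValueError("A group needs at least one subtask score")
    worst = min(subtask_scores)
    if worst <= DESIGN_FAIL_MAX:
        return worst  # deal-breaker: the fail score stands for the whole group
    # Assumption: without a deal-breaker, report the average of the scores.
    return sum(subtask_scores) / len(subtask_scores)


print(group_score([5, 4, 2]))  # 2
print(group_score([5, 4, 3]))  # 4.0
```

In the first call, two strong scores cannot compensate for the design fail, so the group is reported as a 2.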

In the example below, you can see how poor handle ergonomics can drive the risk of harm to the user.

Step 4: Rating

The full assessment tool is now set up, and we can start to rate each of the individual designs included in the product test.

This part will demonstrate whether the rating scales are defined clearly enough or whether they require more detailing. As noted in step 3, two persons should ideally be able to reach a similar rating using the scales (interrater agreement).

Below is a high-level visual overview of all five electric kettles and how they performed on the two tasks and their subtasks. It clearly shows that there are multiple design fails scattered across the five products. Likewise, it also tells us that there are excellent design elements equally scattered across the five kettles.

We will discuss these findings from a general design perspective in our learnings below.

Results from the electric kettle design review and what we learned about electric kettles

The results from the design review of the five electric kettles are presented in the slides below.

Review learnings

To use the Forrest Gump analogy, the analysis revealed that there are definitely some chocolates you would like to avoid. Also, the price is not a good indicator of quality. …

Learning 1:

There is no one perfect kettle (in our sample)

There is no clear winner among the five kettles, since they all have critical design flaws that simply should not be there, no matter the price point. Of course, you could argue that you as a consumer will feel extra disappointed when you experience design fails with a premium-priced product.

This realisation during our review gave rise to the term “Franken-Kettle” to denote that there are really good qualities represented in each kettle design.

This translates into a design opportunity to design a new electric kettle where all the design elements integrate the best of the best.
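The idea of combining the best of the best can be illustrated with a small sketch that picks, for each design element, the kettle with the highest score. The kettle names and scores below are made-up placeholders and not the results of our review, and the function `franken_kettle` is hypothetical.

```python
# Hypothetical group scores per kettle. Placeholder values, NOT the review's results.
scores = {
    "Kettle A": {"Turn on/off": 5, "General Handling": 2, "Water in/out": 4},
    "Kettle B": {"Turn on/off": 3, "General Handling": 5, "Water in/out": 1},
    "Kettle C": {"Turn on/off": 2, "General Handling": 4, "Water in/out": 5},
}


def franken_kettle(scores_by_kettle: dict[str, dict[str, int]]) -> dict[str, str]:
    """For each design element, pick the kettle with the highest score."""
    # Assumes every kettle was scored on the same set of design elements.
    elements = next(iter(scores_by_kettle.values())).keys()
    return {
        element: max(scores_by_kettle, key=lambda k: scores_by_kettle[k][element])
        for element in elements
    }


print(franken_kettle(scores))
# {'Turn on/off': 'Kettle A', 'General Handling': 'Kettle B', 'Water in/out': 'Kettle C'}
```

In this made-up example, every kettle has a design fail somewhere, yet each design element is solved well by at least one of them.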

The “Franken-Kettle”

Learning 2:

Try out the product before you buy it

There are really critical elements to the user experience of electric kettles that only reveal themselves when you try them out.

Preferably, you should try them out in a realistic manner - handling, pouring water in and out, turning them on and off.

With online shopping this is of course not possible. That brings us to the next learning.

Learning 3:

Consumer organisations need to review UX

The technical features of products only tell half the story about the usefulness of any product. The quality-in-use and usability are very important aspects that impact the user experience. Therefore, consumer organisations should start to review those qualities in more detail. In that way, consumers would have a much more qualified basis for choosing the right product - and avoiding unpleasant experiences.

We also think that retailers should start to critically assess the products they feature in their shops. Especially shops that feature premium products should be much more critical about what user experiences they are selling to their customers.

Learning 4:

Back to design school

Many of the design problems uncovered in this review are not exotic or complex in nature. They represent straightforward design qualities that shouldn’t be hard to get right. The complementary nature of the products, where we see that all design features actually score 4 or 5 in one kettle or another, is a clear testimony to that. In other words - all design features are designed right across the kettles. However, no one kettle gets them all right.

Industrial designers, therefore, seem to miss out on having a systematic approach to design where all qualities are catered for with equal attention to detail. At least when it comes to the human factors qualities this review has in focus.

If you are a designer and would like to boost your skills in understanding how to optimise your design for human perception, cognition and action then we suggest that you attend our UX Campus course: UX Expert Design Tools. You can read more about the three-day course here:

Let’s review your design
